One interesting aspect of the deep learning ecosystem is the abundance of frameworks to choose from. The flip side is that more options bring more confusion, especially when trying to pick a single framework for the entire gamut of problems. In the end, instead of settling on one, we may need to work with multiple deep learning frameworks, choosing among them according to the nature of the problem to solve.

TensorFlow is one of the most popular deep learning frameworks (de facto the most popular in terms of GitHub stars). It comes with excellent documentation, including documentation for installation: the official installation page provides an elaborate guide for multiple OS platforms. Then why this post?

The latest version of TensorFlow with GPU support (version 1.8 at the time this post is published) is built against CUDA 9.0. However, NVIDIA has already released CUDA 9.1, and newer versions may follow in the near future. Because TensorFlow lags behind the CUDA GA version, the publicly released TensorFlow package cannot work out of the box on a system that has only the latest CUDA version installed. One remedy is to build and install TensorFlow from source, which can be non-trivial, especially for those who are not familiar with the source build mechanism.
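
To make the mismatch concrete, here is a minimal sketch that reads the CUDA release out of the text printed by `nvcc --version` and compares it with the version the prebuilt package targets. The helper names (`parse_cuda_release`, `matches_prebuilt`) and the sample line are illustrative assumptions, not part of TensorFlow or CUDA; the sample only mimics the usual shape of the `nvcc` output for CUDA 9.1.

```python
import re

# CUDA version targeted by the prebuilt TensorFlow 1.8 GPU package (per the text above).
PREBUILT_CUDA = "9.0"


def parse_cuda_release(nvcc_output):
    """Return 'major.minor' found in `nvcc --version` text, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return "%s.%s" % (match.group(1), match.group(2))


def matches_prebuilt(installed, prebuilt=PREBUILT_CUDA):
    """True only when the installed CUDA release equals the one TensorFlow was built against."""
    return installed == prebuilt


# Hypothetical sample line in the style of `nvcc --version` on a CUDA 9.1 system.
SAMPLE = "Cuda compilation tools, release 9.1, V9.1.85"

installed = parse_cuda_release(SAMPLE)
if not matches_prebuilt(installed):
    print("CUDA %s found, prebuilt TensorFlow expects %s: build from source"
          % (installed, PREBUILT_CUDA))
```

On a real machine, the text passed to `parse_cuda_release` would be the actual output of `nvcc --version` rather than the hard-coded sample.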

The final system setup after completing the installation steps explained in the posts will be as follows.

| Item | Value |

|---|---|

| OS | Ubuntu 16.04 |

| NVIDIA driver version | 390.48 |

| CUDA version | 9.1 |

| cuDNN version | 7.1.3 |

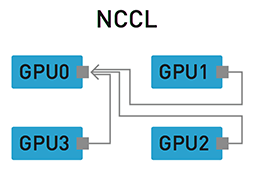

| NCCL version | 2.1.15 |

| Python version | 2.7.12 |

| Python install method | virtualenv |

| TensorFlow version | 1.8.0 |
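
Once the installation is complete, the Python-visible entries of the table can be checked from inside the virtualenv. The following is a rough sketch under stated assumptions: `EXPECTED`, `detect_versions`, and `mismatches` are hypothetical names introduced here, and only the Python and TensorFlow versions are covered because the other components are not exposed through the interpreter in the same way.

```python
import platform

# Expected versions, copied from the table above.
EXPECTED = {
    "python": "2.7.12",
    "tensorflow": "1.8.0",
}


def detect_versions():
    """Return the versions visible from the current interpreter."""
    found = {"python": platform.python_version()}
    try:
        import tensorflow as tf  # importable only after TensorFlow is installed
        found["tensorflow"] = tf.__version__
    except ImportError:
        found["tensorflow"] = None
    return found


def mismatches(expected, found):
    """Return (name, expected, found) for every component whose version differs."""
    return [(name, want, found.get(name))
            for name, want in sorted(expected.items())
            if found.get(name) != want]


if __name__ == "__main__":
    for name, want, got in mismatches(EXPECTED, detect_versions()):
        print("%s: expected %s, found %s" % (name, want, got))
```

The script prints nothing when the environment matches the table, and one line per differing component otherwise.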

Note that these components will be updated in the future, which implies version upgrades. This post is expected to remain valid even after such upgrades. Should it become invalid, the content will be updated or another post will be written, provided there are sufficient comments or feedback regarding the existing content.